Computer science professor Marie A. Roch grew up down the road from SeaWorld in San Diego. Although she later moved far from the ocean, she now applies her expertise in machine learning to benefit the marine mammals that fascinated her as a child.

Earlier this year, Roch and her SDSU colleague Xiaobai Liu received additional funding from the Department of Defense's Office of Naval Research to analyze the sounds of various dolphins and whales using computer algorithms. The work will help military and commercial ships monitor their impact on marine mammals.

“The thing I really like about this work is that the work I’m doing can have real-life implications on policy and hopefully lead to conservation success,” said Roch. “You can’t protect what you don’t understand.”

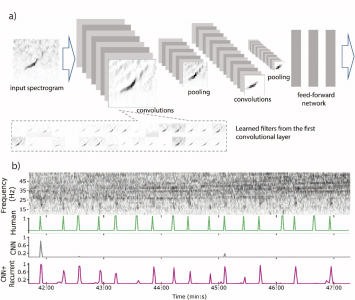

Figure from Acoustics Today illustrating how convolutional and recurrent neural networks detect cetacean calls

The algorithms that Roch and colleagues are developing are designed to detect when cetaceans are near ships. Distinguishing the sounds produced by species such as bottlenose dolphins, endangered right whales, and beaked whales can also help biologists better understand animal communication and behavior.

In a paper published in the Journal of the Royal Society Interface this summer, Roch and colleagues describe a neural network that automatically detects fin whale song notes in spectrograms, which are visual representations of sound. Giving the network the ability to attend to longer-term patterns in the song, so that it weighs several notes at once when making a prediction, allowed it to detect notes that earlier models missed, especially in noisy conditions with passing ships and waves.
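For readers curious what a spectrogram is computationally, the sketch below builds one with NumPy using a short-time Fourier transform. It is a hypothetical illustration, not the team's code: the `spectrogram` helper, the 250 Hz sample rate, the frame sizes, and the synthetic signal (a one-second 20 Hz tone in noise, loosely inspired by the low-frequency pulses fin whales are known for) are all assumptions chosen for the example.

```python
import numpy as np


def spectrogram(signal, fs, frame_len=256, hop=128):
    """Return (times, freqs, spec_db): a magnitude spectrogram in decibels.

    Hypothetical helper. The signal is cut into overlapping Hann-windowed
    frames and each frame is Fourier-transformed (a short-time Fourier
    transform). Rows of spec_db are frequency bins; columns are time frames.
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T  # (freq bins, frames)
    spec_db = 20 * np.log10(magnitude + 1e-10)  # small offset avoids log(0)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + frame_len / 2) / fs  # frame centers
    return times, freqs, spec_db


# Synthetic example (assumed values): 10 s of noise sampled at 250 Hz,
# with a 1-second, 20 Hz tone burst starting at t = 4 s.
fs = 250
t = np.arange(10 * fs) / fs
rng = np.random.default_rng(0)
signal = 0.1 * rng.standard_normal(t.size)
burst = (t >= 4) & (t < 5)
signal[burst] += np.sin(2 * np.pi * 20 * t[burst])

times, freqs, spec_db = spectrogram(signal, fs)

# The brightest cell of the spectrogram should sit near 20 Hz, during the burst.
f_idx, t_idx = np.unravel_index(spec_db.argmax(), spec_db.shape)
peak_freq, peak_time = freqs[f_idx], times[t_idx]
print(f"peak at {peak_freq:.1f} Hz, {peak_time:.2f} s")
```

Each column of `spec_db` captures only a brief slice of time. A detector that scores one column at a time sees a single moment, while one that looks across many columns can use longer-term context, which is roughly the kind of pattern the article says helped the fin whale detector.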

Next steps include generating synthetic calls and using them to train a second network to tell real calls from generated ones. Peter Conant, an undergraduate in Roch's lab, is adapting the code into a form that is easier for biologists to use. He plans to present this work at a conference in March.

And marine mammals aren't the only creatures aided by Roch's work. Another international collaboration aims to understand how meerkats, coatis, and hyenas make group decisions. The three species fall along a spectrum of cooperation: meerkats rarely operate alone, whereas hyenas come together only occasionally and then disperse.

The team hopes to return to fieldwork soon to collect audio and accelerometer data from collars worn by the animals. Algorithms developed by Roch and her team will then classify the different types of calls these land mammals make and analyze how communication leads to collective decisions.